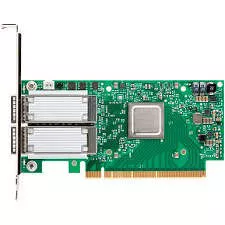

Mellanox MCX416A-CCAT ConnectX-4 EN Network Card, 100 GbE Dual-Port QSFP, PCIe 3.0 x16

$911.92

The ConnectX®-4 EN network controller, with 100Gb/s Ethernet connectivity, provides the highest-performance and most flexible solution for high-performance computing, Web 2.0, cloud, data analytics, database, and storage platforms.

With the exponential growth of data being shared and stored by applications and social networks, the need for high-speed, high-performance compute and storage data centers is skyrocketing. ConnectX-4 EN provides exceptionally high performance for the most demanding data centers, public and private clouds, Web 2.0 and Big Data applications, and storage systems, enabling today's corporations to meet the demands of the data explosion.

ConnectX-4 EN provides an unmatched combination of 100Gb/s bandwidth in a single port, the lowest available latency, and specific hardware offloads, addressing both today's and the next generation's compute and storage data center demands.

I/O VIRTUALIZATION

ConnectX-4 EN SR-IOV technology provides dedicated adapter resources and guaranteed isolation and protection for virtual machines (VMs) within the server. I/O virtualization with ConnectX-4 EN gives data center administrators better server utilization while reducing cost, power, and cable complexity, allowing more VMs and more tenants on the same hardware.

OVERLAY NETWORKS

To better scale their networks, data center operators often create overlay networks that carry traffic from individual virtual machines over logical tunnels in encapsulated formats such as NVGRE and VXLAN. While this solves network scalability issues, it hides the TCP packet from the hardware offloading engines, placing higher loads on the host CPU. ConnectX-4 addresses this by providing advanced NVGRE, VXLAN, and GENEVE hardware offloading engines that encapsulate and decapsulate the overlay protocol headers, enabling the traditional offloads to be performed on the encapsulated traffic. With ConnectX-4, data center operators can achieve native performance in the new network architecture.
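To make the encapsulation step concrete, the following is a minimal software sketch of VXLAN framing as defined in RFC 7348. It is illustrative only and does not represent how the adapter implements its offload engines; the function names `vxlan_encap` and `vxlan_decap` are hypothetical. It shows why the inner TCP packet is hidden: the original frame only begins after an 8-byte VXLAN header inside the outer UDP payload.

```python
import struct
from typing import Tuple

VXLAN_UDP_PORT = 4789        # IANA-assigned VXLAN destination port (RFC 7348)
VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field carries a valid value
VXLAN_HEADER_LEN = 8         # bytes


def vxlan_encap(inner_frame: bytes, vni: int) -> bytes:
    """Prepend a VXLAN header to an inner Ethernet frame (hypothetical helper).

    The result is the payload of the outer UDP datagram; the outer
    Ethernet/IP/UDP headers are not built here.
    """
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 0: 8 flag bits followed by 24 reserved bits.
    # Word 1: 24-bit VXLAN Network Identifier followed by 8 reserved bits.
    header = struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)
    return header + inner_frame


def vxlan_decap(udp_payload: bytes) -> Tuple[int, bytes]:
    """Strip the VXLAN header and return (vni, inner_frame)."""
    if len(udp_payload) < VXLAN_HEADER_LEN:
        raise ValueError("payload shorter than VXLAN header")
    word0, word1 = struct.unpack("!II", udp_payload[:VXLAN_HEADER_LEN])
    if not (word0 >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag not set")
    return word1 >> 8, udp_payload[VXLAN_HEADER_LEN:]
```

A NIC without overlay awareness sees only the outer UDP datagram, so checksum and segmentation offloads cannot reach the inner TCP header unless the hardware parses past this header, which is the role the text assigns to the overlay offload engines.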

ASAP²™

Mellanox ConnectX-4 EN offers Accelerated Switching And Packet Processing (ASAP²) technology to perform offload activities in the hypervisor, including data path, packet parsing, VXLAN and NVGRE encapsulation/decapsulation, and more.

ASAP² allows offloading by handling the data plane in the NIC hardware using SR-IOV, while leaving the control plane used in today's software-based solutions unmodified. As a result, performance is significantly higher without the associated CPU load. ASAP² has two formats: ASAP² Flex™ and ASAP² Direct™.

One example of a virtual switch that ASAP² can offload is Open vSwitch (OVS).

RDMA OVER CONVERGED ETHERNET (RoCE)

ConnectX-4 EN supports the RoCE specifications, delivering low latency and high performance over Ethernet networks. Leveraging data center bridging (DCB) capabilities as well as ConnectX-4 EN advanced congestion control hardware mechanisms, RoCE provides efficient low-latency RDMA services over Layer 2 and Layer 3 networks.

MELLANOX PEERDIRECT™

PeerDirect™ communication provides high-efficiency RDMA access by eliminating unnecessary internal data copies between components on the PCIe bus (for example, from GPU to CPU), and therefore significantly reduces application run time. ConnectX-4 advanced acceleration technology enables higher cluster efficiency and scalability to tens of thousands of nodes.