Introduction

While GPUs often take center stage in machine learning, a high-performance CPU is equally essential. The CPU manages critical tasks like data preprocessing, memory transfer, and task coordination, feeding data to the GPU and keeping the entire pipeline running smoothly.

Without a capable CPU, even the most powerful graphics cards can be underutilized. In this blog, we’ll explain why CPU performance matters in machine learning and share our top recommendations for building a balanced, high-performance system.

Why CPU Performance Matters in Machine Learning

Machine learning relies on vast amounts of data that must be collected, stored, retrieved, and processed, and much of that work falls to the CPU. It handles file I/O, memory management, and data distribution to accelerators, storage devices, and the network.

In scenarios where data is frequently updated, such as collaborative spreadsheets with embedded algorithms, a fast CPU significantly improves performance by handling quick reads, writes, and transfers.

While GPUs are optimized for large-scale parallel computation—like neural networks and matrix math—the CPU orchestrates the broader system. A well-matched CPU ensures your GPU operates at full capacity and that your overall machine learning workflow remains efficient.
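
To make the CPU's role concrete, here is a minimal sketch of CPU-side preprocessing spread across several cores before batches are handed to an accelerator. It uses only the Python standard library. The `preprocess` function, the sample data, and the batch size are hypothetical stand-ins, not part of any specific framework.

```python
import os
from concurrent.futures import ProcessPoolExecutor


def preprocess(sample: list[float]) -> list[float]:
    """Hypothetical CPU-bound step: scale a sample to the 0-1 range."""
    low, high = min(sample), max(sample)
    span = (high - low) or 1.0  # avoid division by zero on constant samples
    return [(x - low) / span for x in sample]


def make_batches(samples, batch_size, workers=None):
    """Preprocess samples on several CPU cores and yield fixed-size batches."""
    workers = workers or os.cpu_count() or 1
    with ProcessPoolExecutor(max_workers=workers) as pool:
        batch = []
        # map() preserves input order while spreading work across processes
        for item in pool.map(preprocess, samples, chunksize=64):
            batch.append(item)
            if len(batch) == batch_size:
                yield batch
                batch = []
        if batch:
            yield batch  # final partial batch


if __name__ == "__main__":
    data = [[float(i), float(i + 1), float(i + 2)] for i in range(1000)]
    for batch in make_batches(data, batch_size=256, workers=4):
        # In a real pipeline, this is where the batch would be copied to the GPU.
        print(len(batch))
```

With more cores available, more worker processes can prepare samples at once, which is one reason core count matters when several GPUs are waiting on data.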

What Are the Best CPUs for Machine Learning?

We recommend the AMD Threadripper PRO 9000WX Series and AMD EPYC 9005 Series processors for machine learning because of their high core counts and strong performance relative to competing platforms. Here is our reasoning.

When selecting a CPU for ML or AI systems, two factors stand out: core count and cache capacity.

- High core count enables faster data distribution and better multitasking, especially when paired with multiple GPUs.

- High cache capacity keeps more data close to the cores, reducing the number of slower trips to system memory during heavy compute operations.

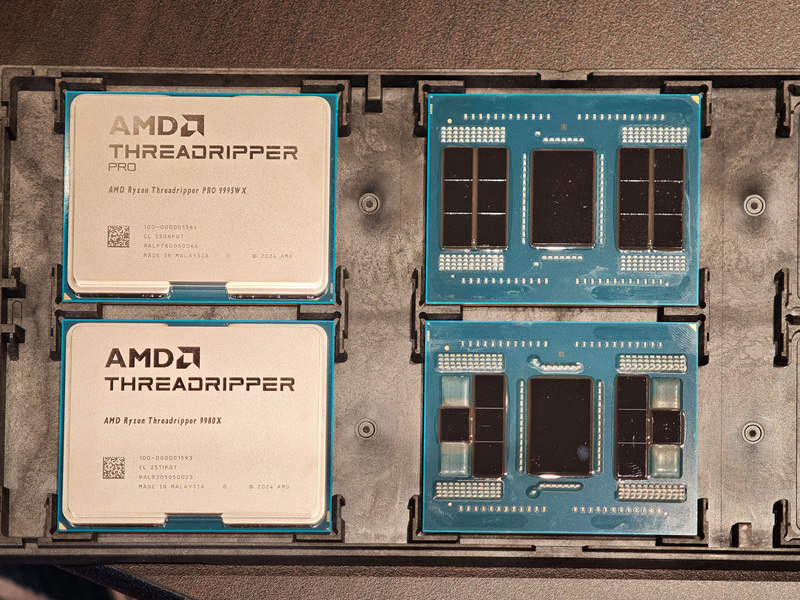

Best Workstation CPU for Machine Learning: AMD Ryzen Threadripper PRO 9995WX

For high-end desktop and workstation builds, the AMD Ryzen Threadripper PRO 9000WX series, particularly the 9995WX, is the best choice for machine learning developers who want the most cores and the most cache.

Built on the advanced Zen 5 architecture and manufactured using TSMC's 4nm process, the 9995WX features:

- 96 cores and 192 threads

- Support for 128 PCIe Gen 5.0 lanes

- 384MB of L3 cache (480MB of combined L2 and L3 cache) for rapid data access

- Support for eight-channel DDR5 memory for high memory bandwidth

Other AMD Threadripper PRO 9000WX processors also offer ample PCIe lanes and the same memory support. However, L3 cache capacity shrinks as you move down the stack.

| Model/Specs | Architecture | Socket | Cores | Threads | Max Boost Clock | Base Clock | Default TDP | L3 Cache | Total PCIe Lanes | Memory |
|---|---|---|---|---|---|---|---|---|---|---|
| AMD Ryzen Threadripper PRO 9995WX | Zen 5 | sTR5 (WRX90) | 96 | 192 | 5.4GHz | 2.5GHz | 350W | 384MB (480MB L2 + L3) | 128 PCIe 5.0 | 8-Channel DDR5 ECC 6400 MT/s |

This CPU offers outstanding scalability, letting you power multi-GPU configurations, high-speed storage arrays, and 100GbE networking, all on a single-socket platform. It’s ideal for researchers, developers, and engineers who work on large-scale AI models, training datasets, and inference workloads and want the flexibility of a workstation.
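
As a rough illustration of what 128 PCIe lanes allow, the sketch below adds up the lanes a build would consume. The device list and link widths (x16 per GPU and NIC, x4 per NVMe drive) are typical values we assume for the example, not a validated configuration. Actual motherboards may not expose every CPU lane to slots.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    lanes_each: int
    count: int


def lanes_remaining(total_lanes: int, devices: list[Device]) -> int:
    """Return spare lanes; a negative value means the build is oversubscribed."""
    used = sum(d.lanes_each * d.count for d in devices)
    return total_lanes - used


# Hypothetical build: link widths are typical values, not a validated config.
build = [
    Device("GPU", lanes_each=16, count=4),
    Device("NVMe SSD", lanes_each=4, count=8),
    Device("100GbE NIC", lanes_each=16, count=1),
]

spare = lanes_remaining(128, build)
print(f"Spare PCIe lanes: {spare}")
```

Under these assumptions, four GPUs, eight NVMe drives, and a network card all run at full link width without sharing lanes.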

Check out our breakdown review of the AMD Ryzen Threadripper PRO and see for yourself how it stacks up.

Best Server CPU for Machine Learning: AMD EPYC 9755

For rackmount servers and enterprise ML deployments, the AMD EPYC 9005 series, specifically the 9755, delivers top-tier performance for data-intensive workloads and carries the most L3 cache in the lineup.

Built on the Zen 5 architecture, the EPYC 9755 features:

- 128 cores and 256 threads on a single socket

- 512MB of L3 cache, the largest in the EPYC 9005 series, for minimizing latency on large datasets

- Support for 12-channel DDR5 memory for extreme bandwidth

- 128 PCIe Gen 5.0 lanes to enable high-throughput GPU and storage configurations

- Exceptional energy efficiency for dense server deployments

| Model/Specs | Architecture | Socket | Cores | Threads | Max Boost Clock | Base Clock | Default TDP | L3 Cache | Total PCIe Lanes | Memory |
|---|---|---|---|---|---|---|---|---|---|---|
| AMD EPYC 9755 | Zen 5 | SP5 | 128 | 256 | 4.1GHz | 2.7GHz | 500W | 512MB | 128 PCIe 5.0 | 12-Channel DDR5 ECC 6400 MT/s |

As of Q3 2025, AMD EPYC 9005 does not have a 3D V-Cache variant. Nonetheless, the EPYC 9005 series is designed for data center applications that demand both throughput and scalability, making it a natural fit for machine learning training clusters, AI inferencing nodes, and hybrid HPC/AI environments.
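
To put the memory specifications in perspective, the sketch below estimates theoretical peak memory bandwidth from channel count and transfer rate. It assumes a 64-bit (8-byte) data path per DDR5 channel and ignores real-world efficiency losses, so treat the results as upper bounds rather than measured figures.

```python
def peak_bandwidth_gbs(channels: int, mega_transfers: int, bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s.

    channels       -- number of populated memory channels
    mega_transfers -- memory speed in MT/s (e.g. 6400 for DDR5-6400)
    bus_bytes      -- bytes moved per transfer per channel (assumed 8)
    """
    return channels * mega_transfers * bus_bytes / 1000  # MB/s -> GB/s


# Channel counts and speeds taken from the two CPUs discussed in this post.
workstation = peak_bandwidth_gbs(channels=8, mega_transfers=6400)
server = peak_bandwidth_gbs(channels=12, mega_transfers=6400)

print(f"8-channel DDR5-6400:  {workstation:.1f} GB/s")
print(f"12-channel DDR5-6400: {server:.1f} GB/s")
```

By this estimate, the twelve-channel server platform has 50% more theoretical memory bandwidth than the eight-channel workstation platform at the same memory speed.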

Check out our release review of AMD EPYC 9005 for more information.

Other CPUs That Are Good for Machine Learning and AI

- AMD EPYC 9004X - Featuring 3D V-Cache, the last-generation AMD EPYC 9004X still offers the most L3 cache in a single socket. If your workload depends more on memory latency than on per-core performance, choose EPYC 9004X.

- AMD Threadripper 9000X - The non-PRO variant of the AMD Threadripper 9000 Series now features 80 full PCIe 5.0 lanes, up from last generation’s 48 lanes of PCIe 5.0. That is enough for Threadripper 9000X workstations to run quad-GPU configurations effectively over PCIe 5.0.

- Intel Xeon 6700E - Though a little less readily available, the Intel Xeon 6700 Series with E-cores is a highly efficient platform for distributed training and AI deployment. However, it does not feature a large L3 cache.

If you are unsure which processor to get based on the other components in your system, the best bet is to discuss what you want to do with a SabrePC engineer. We can recommend the best solution to optimize system performance based on your requirements.

You can also browse CPUs and Processors on the SabrePC website. For more information, contact us directly.

Recommended GPU for Machine Learning

For most professional machine learning workloads, we recommend the NVIDIA RTX PRO 6000 Blackwell. With 96GB of GDDR7 VRAM, this GPU is built to handle massive datasets—whether you're working with large language models, high-resolution images, or video-based data.

While the NVIDIA H100 is a top-tier option, it's often cost-prohibitive and difficult to source. The RTX PRO 6000 Blackwell offers a more accessible alternative without sacrificing performance, making it ideal for high-end workstations powered by CPUs like the AMD Threadripper PRO.

Its high memory capacity and compute performance allow you to train and deploy deep learning models efficiently, especially when scaled in multi-GPU configurations.
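
For a back-of-the-envelope sense of what fits in 96GB, the sketch below multiplies parameter count by bytes per parameter. The figures are assumptions for illustration: 2 bytes per parameter for 16-bit inference weights, and roughly 16 bytes per parameter as a commonly cited rule of thumb for mixed-precision training with the Adam optimizer. Activations, KV cache, and framework overhead are ignored, so real limits are lower.

```python
VRAM_GB = 96  # RTX PRO 6000 Blackwell capacity quoted above


def footprint_gb(params_billion: float, bytes_per_param: int) -> float:
    """Approximate memory for model state in GB (1 GB = 1e9 bytes)."""
    # 1e9 parameters * bytes per parameter / 1e9 bytes per GB
    return params_billion * bytes_per_param


def fits(params_billion: float, bytes_per_param: int, vram_gb: float = VRAM_GB) -> bool:
    """True if the estimated model state fits in the given VRAM."""
    return footprint_gb(params_billion, bytes_per_param) <= vram_gb


# Assumed byte counts: 2 for 16-bit inference, 16 for Adam mixed-precision training.
for size in (5, 7, 30, 70):
    print(
        f"{size}B params: "
        f"inference {footprint_gb(size, 2):.0f} GB (fits: {fits(size, 2)}), "
        f"training {footprint_gb(size, 16):.0f} GB (fits: {fits(size, 16)})"
    )
```

When a model's estimated footprint exceeds a single card, that is where multi-GPU configurations come in.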

Why You Still Need a GPU

While the CPU handles orchestration, data prep, and system-level tasks, the GPU does the heavy lifting. Its thousands of cores are optimized for parallel processing, which is essential for matrix math, neural networks, and large-scale inference.

In nearly every machine learning workflow, a powerful GPU dramatically reduces training time and enables real-time or batch inference at scale. CPUs and GPUs work best together, and for serious ML development, both are essential.

Conclusion

Building an effective machine learning system goes beyond just choosing the best GPU—it requires a balanced combination of high-performance CPUs and GPUs to fully unlock your model’s potential. While the GPU accelerates core compute tasks like training and inference, the CPU manages data movement, preprocessing, and system orchestration. Skimping on either can create a performance bottleneck that slows down your entire workflow.

For workstation users, the AMD Ryzen Threadripper PRO 9000WX series delivers unmatched core count, bandwidth, and expansion capabilities—ideal for power users working on complex models. On the server side, the AMD EPYC 9005 series offers extreme scalability and efficiency for data center and cloud-based AI workloads. Paired with a high-memory GPU like the NVIDIA RTX PRO 6000 Blackwell, you can build a machine that’s ready for the demands of today’s most advanced machine learning applications.

Whether you're training deep neural networks, running real-time inference, or exploring AI research, investing in the right CPU-GPU combination is key to staying productive and future-ready. Choose your components wisely, and build a system with SabrePC that scales as you grow. Contact us today for more information.
