Jensen Huang opened NVIDIA GTC 2023 by addressing Moore's Law: the observation that the number of transistors on a chip (and, in theory, its performance) doubles roughly every 2 years. Huang expressed concern about its slowdown while acknowledging the rapid progress in computing technology. This year marks a new era in technology, as mainstream adoption of artificial intelligence drives accelerated computing.
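To make the doubling rule concrete, here is a minimal sketch of the idealized Moore's Law curve. The function name and the starting count are hypothetical, chosen only for illustration, and the 2-year doubling period is the rule of thumb quoted above rather than a measured figure.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period: float = 2.0) -> float:
    """Project a transistor count forward, assuming one doubling per period."""
    return initial_count * 2 ** (years / doubling_period)


# Hypothetical baseline: express growth relative to a starting count of 1.
for years in (2, 4, 10):
    growth = projected_transistors(1.0, years)
    print(f"after {years} years: {growth:g}x the starting count")
```

Under this idealized rule, a decade yields five doublings, or 32x. A slowdown in Moore's Law means the effective doubling period stretches beyond 2 years, which flattens this curve.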

NVIDIA has played a crucial role in this technological revolution by developing AI technology and deploying it widely. A prime example is ChatGPT, which relies heavily on NVIDIA's data center accelerators to deliver speedy responses to user queries. From gaming all the way to powering the most complex models, let's take a look at the hardware highlights and announcements that Jensen covered during GTC 2023.

Grace CPU

The Grace CPU comprises 72 Arm cores connected by a high-speed, on-chip coherent fabric that delivers 3.2 TB/s. The Grace Superchip pairs two Grace CPUs on a single board with enhanced LPDDR memory, a configuration that delivers 1 TB/s of bandwidth at an eighth of the power.

NVIDIA's latest offering, the Grace CPU, has been specifically designed for high-throughput AI workloads that cannot be processed through parallelized computing on GPUs. This CPU is built for cloud data centers and is highly energy efficient.

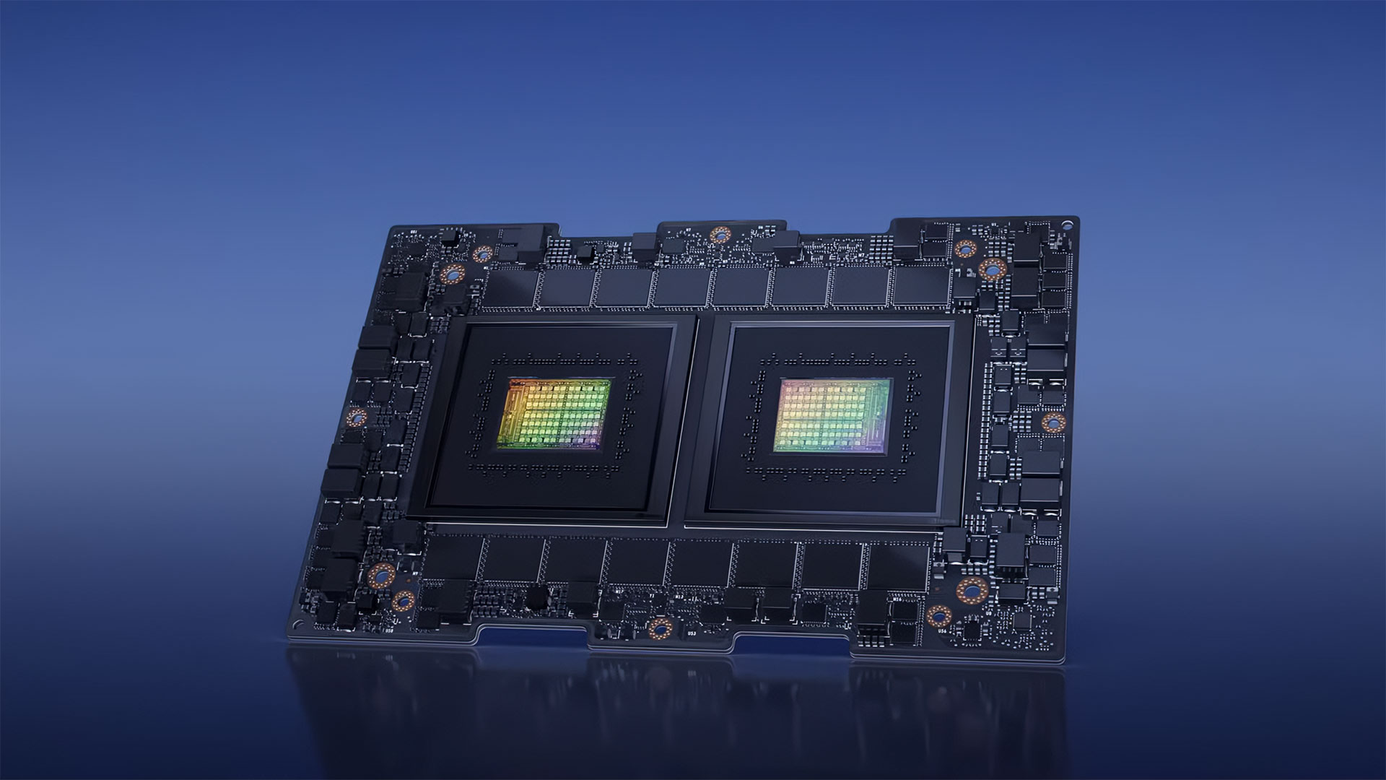

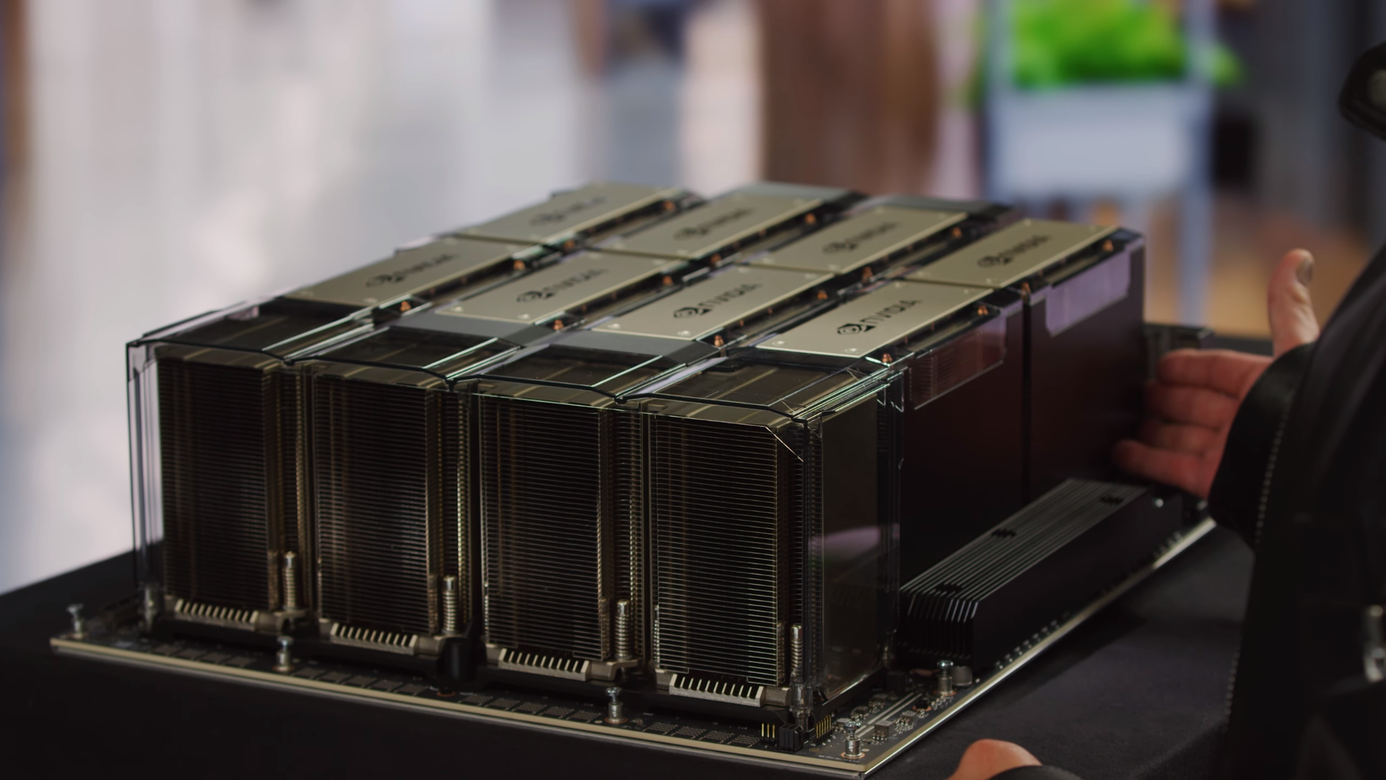

NVIDIA DGX H100

NVIDIA DGX H100 was announced at GTC 2022 and is now in full production. Superseding DGX A100, the DGX H100 is what NVIDIA calls the new AI engine of the world. NVIDIA has come a long way in providing accelerators that power the world's most ambitious innovations. DGX is an AI supercomputer and the foundation for developing large, complex models. With advancements in H100 such as the added Transformer Engine, DGX H100 enables around-the-clock operation and inferencing: one giant GPU to process data, train models, and deploy innovations.

To put these supercomputers in the hands of every corporation, NVIDIA launched NVIDIA DGX Cloud. Users can access NVIDIA's most powerful systems instantly from a browser, with NVIDIA AI Enterprise, its most complete suite of libraries for end-to-end training and deployment.

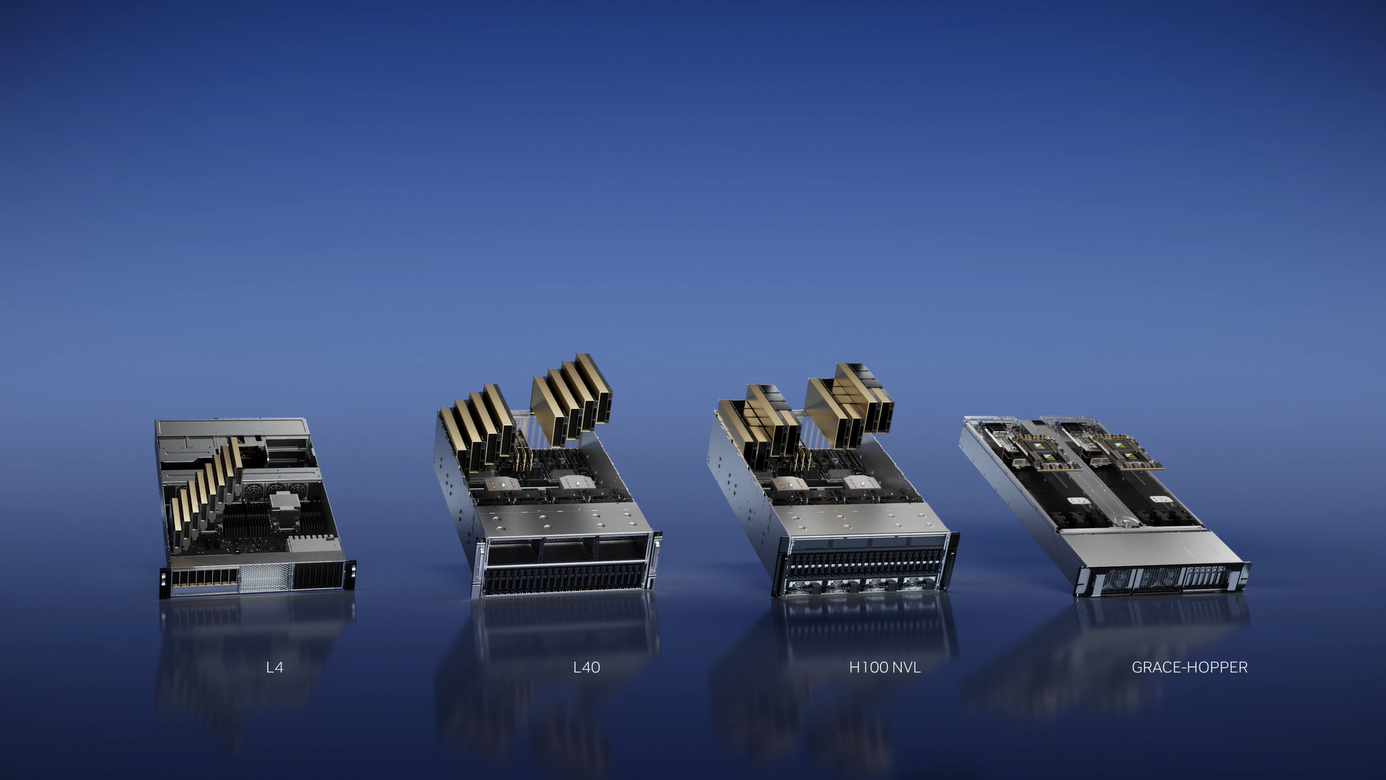

AI Accelerators for the Modern Day

Huang announced new platforms for AI video, image generation, LLM deployment, and recommender inference. They combine NVIDIA's software with the latest Ada, Hopper, and Grace Hopper processors, including the new L4 Tensor Core GPU and the H100 NVL GPU.

The NVIDIA L4 Tensor Core GPU is built to power AI video. NVIDIA claims it delivers up to 120x better performance than CPUs with 99% better energy efficiency. It's suited to cloud video processing and can replace over 100 dual-socket CPU servers. Companies that process large volumes of video data can count on L4 for AV1 video processing, generative AI, and augmented reality.

The NVIDIA L40 (announced at GTC 2022) is the engine for NVIDIA Omniverse. Its acceleration capabilities enable optimized graphics and AI-enabled 2D, video, and 3D image generation. It can help with creative projects by applying prompted changes such as adding backgrounds and special effects or removing unwanted imagery, much as DALL-E 2 and Stable Diffusion showed us late last year.

The H100 NVL is the Tensor Core GPU for large language model deployment, designed to scale LLM inference on the common PCIe servers found in data centers. Effectively a more powerful NVIDIA H100, the H100 NVL comes as a pair of GPUs linked by NVLink to deliver extremely high throughput over a lightning-fast GPU interconnect. NVIDIA claims it is up to 10 times faster than the A100 for LLM inference and can reduce the processing costs of LLMs by an order of magnitude.

The Grace Hopper Superchip connects the Grace Arm CPU with the H100 GPU for the lowest latency between CPU and GPU. It's suited to workloads that process giant datasets, such as recommender systems, vector databases, and graph neural networks.

Supercharging Generative AI

NVIDIA provides not only the platform but also the engine for individuals and corporations who want to develop generative AI. Jensen Huang announced NVIDIA AI Foundations, a suite of cloud services that lets users build their own generative AI for specific tasks using their own data.

The AI Foundations services include NVIDIA NeMo (large language models), Picasso (visual content generative AI), and BioNeMo (drug screening and design). All of these services build on NVIDIA's pre-trained models, which have billions of parameters and were trained on massive datasets, and users will have access to experts to assist them as needed.

Wrapping Up

NVIDIA has transitioned from its gaming hardware days to being the engine that drives AI models from start to finish. Cloud and software partners, researchers and scientists, prosumers and consumers alike are all affected by the recent development and mainstream adoption of artificial intelligence, especially generative AI. We are giving our computers and machines the ability to be more creative than ever before, and the world is changing as it moves toward the next technological revolution.

The Era of AI is on the horizon, and it's up to us to keep up. Without accelerated computing and the engines NVIDIA provides, our technology would not be where it is today. Innovations are made possible not only by the hardware and software but also by those who harness them.

Interested in scaling up your computing infrastructure?

Contact SabrePC Today!