What is Edge Computing?

Edge computing is the practice of deploying compute resources close to data sources so that data can be processed and analyzed where it is generated.

Imagine you're at a baseball game and want to buy food. Instead of leaving your seat, you can buy from stadium vendors who walk the aisles selling to attendees. Because these vendors come to you, you can make your purchase quickly. For more extensive options, you may still need to visit the main concession stand.

Similarly, edge computing places computational resources where data is generated, so most processing can happen on the spot, like buying from a roaming vendor. When a workload is too large or complex for local hardware, the data can still be sent to a central cloud or data center, which is like visiting the main concession stand.

The Advantages of Edge Computing

Edge computing offers several key benefits that optimize data processing and network performance.

- Significantly reduces latency by processing data closer to its source, enabling real-time capabilities essential for IoT devices and smart home security systems.

- Maximizes bandwidth efficiency by sending only necessary data to the cloud, reducing network congestion and improving overall performance.

- Improves security and privacy by processing sensitive information on local hardware at the edge of the network instead of transmitting it elsewhere.

- Keeps systems functioning during internet outages, since local processing continues offline.

- Supports scalability and redundancy through its distributed architecture.
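Offline operation usually means a device keeps collecting and processing data while disconnected, then delivers the backlog once the connection returns. Below is a minimal store-and-forward sketch. It assumes a hypothetical `send` function that reports success or failure; a real deployment would likely also persist the queue to disk so it survives a reboot.

```python
from collections import deque


class StoreAndForwardBuffer:
    """Queue outgoing readings locally and deliver them once the uplink returns.

    `send` is a hypothetical callable standing in for a device's real uplink;
    it must return True on success and False on failure.
    """

    def __init__(self, send, max_items=1000):
        self.send = send
        # Bounded queue: if an outage outlasts the buffer, the oldest readings are dropped.
        self.pending = deque(maxlen=max_items)

    def publish(self, reading):
        self.pending.append(reading)
        return self.flush()

    def flush(self):
        # Deliver in arrival order; stop at the first failure and retry later.
        while self.pending:
            if not self.send(self.pending[0]):
                return False
            self.pending.popleft()
        return True


# Simulated uplink that stays down until `uplink_up` is set to True.
uplink_up = False
delivered = []


def simulated_send(item):
    if uplink_up:
        delivered.append(item)
        return True
    return False


buffer = StoreAndForwardBuffer(simulated_send)
buffer.publish({"temp_c": 21.5})  # outage: stays queued
buffer.publish({"temp_c": 21.7})  # outage: stays queued
uplink_up = True                  # connection restored
buffer.flush()                    # both readings delivered in order
print(delivered)
```

The bounded `deque` is a deliberate trade-off: during a very long outage the device keeps its most recent data rather than running out of memory.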

Edge devices enable real-world applications that require instant processing at the data source. For example, a self-driving car uses its onboard computer—an edge device—to make split-second decisions for safe navigation. By processing data closer to where it originates, edge computing provides the quick responses and consistent performance these applications need.
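A quick back-of-the-envelope calculation shows why split-second decisions belong on the vehicle itself. The latency figures below are illustrative assumptions, not measurements of any real system; what matters is how the distance a car covers grows with decision delay.

```python
def distance_during_delay(speed_kmh, delay_ms):
    """Metres a vehicle covers while it waits `delay_ms` for a decision."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * delay_ms / 1000


# Illustrative latencies only (assumptions, not measurements).
ONBOARD_MS = 10   # assumed decision time on the car's own computer
REMOTE_MS = 100   # assumed round trip to a distant data center, plus processing

for label, delay in [("onboard", ONBOARD_MS), ("remote", REMOTE_MS)]:
    print(f"{label}: {distance_during_delay(100, delay):.2f} m at 100 km/h")
# onboard: 0.28 m at 100 km/h
# remote: 2.78 m at 100 km/h
```

Because distance grows linearly with delay, every extra millisecond spent waiting on a remote server is ground the car covers before it can react.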

How Does Edge Computing Work?

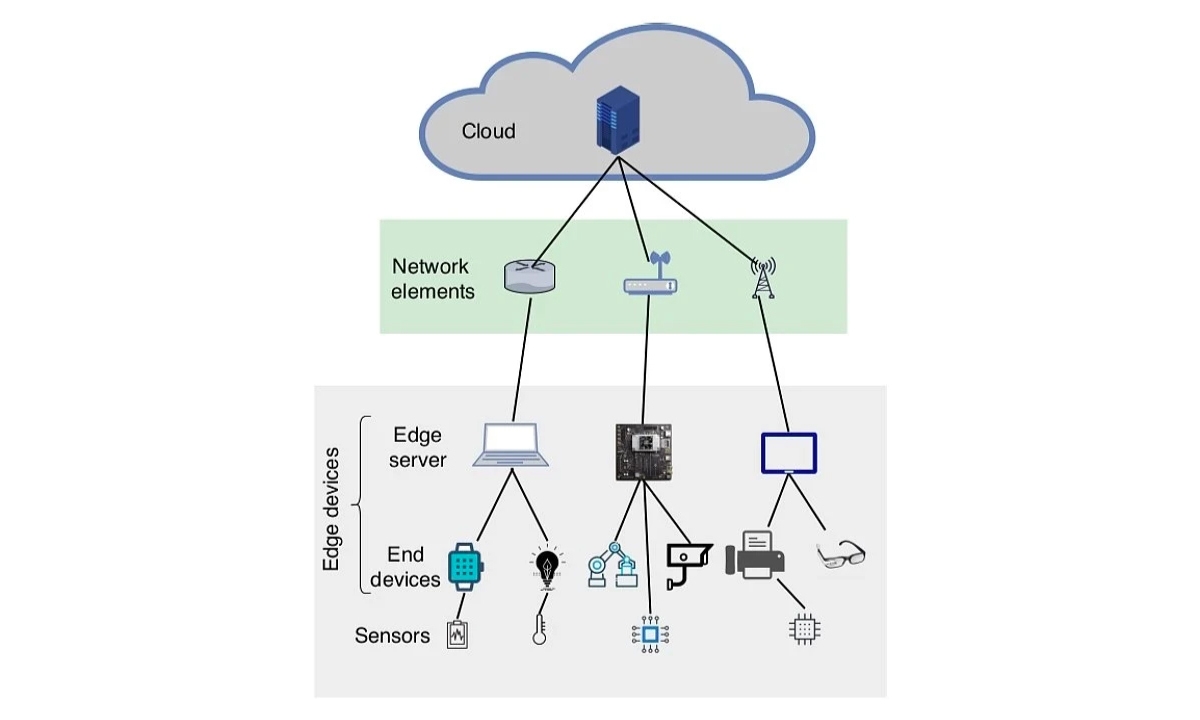

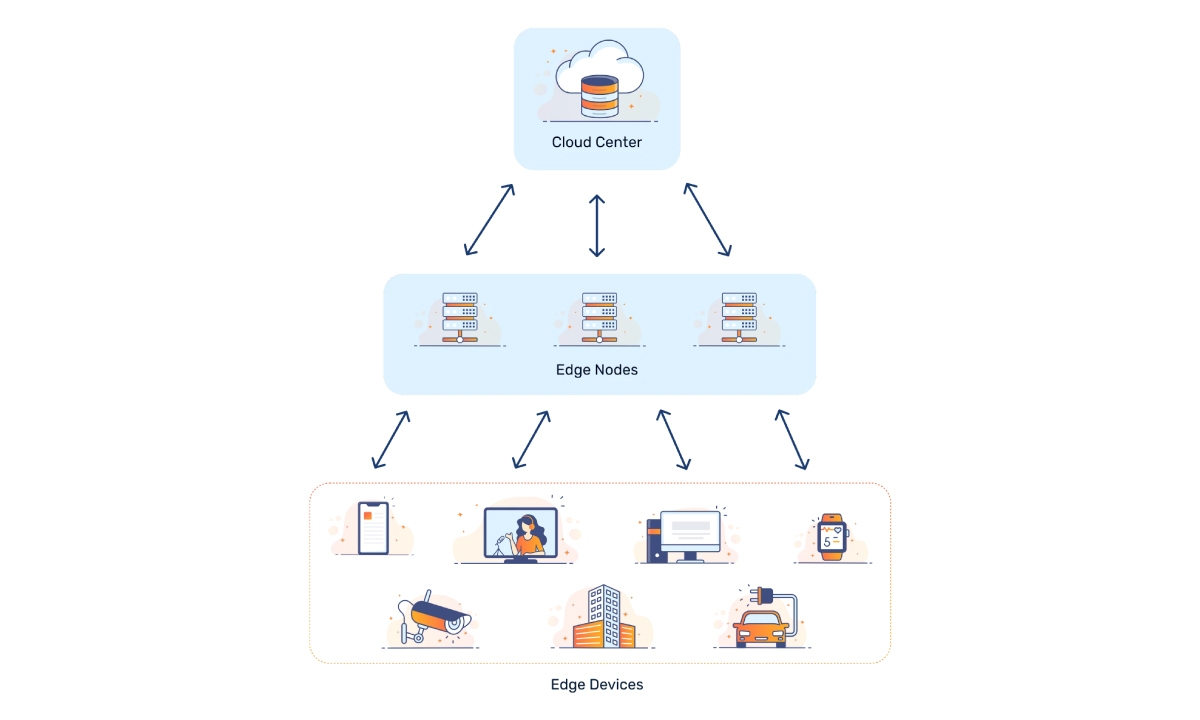

Edge devices act as data collectors and processors in the field, bringing computing power closer to data sources. These devices serve as the network's data processing nodes, handling information before sending it to the cloud or data center. Edge computing relies on three main types of devices:

- Sensors: Simple devices that detect environmental conditions like temperature, humidity, and movement. These are commonly used in agriculture, weather monitoring, smart homes, and healthcare.

- IoT (Internet of Things) devices: Smart devices that interact with their environment and connect to the internet, including security cameras, wearables, and self-driving cars. These devices enable real-time computing where minimal delay is crucial.

- Edge servers: Powerful computing nodes that perform complex tasks such as AI-based monitoring, computer vision, and real-time analysis close to the data source.

The primary function of edge devices is local data processing. In a smart home setting, sensors throughout the house monitor environmental conditions and security. These devices process data on-site, filtering and aggregating information before transmitting it to the main hub.
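Here is a minimal sketch of that filter-and-aggregate step. It assumes a hub that wants one summary per time window instead of every raw sample. The function name, the valid range used to discard glitches, and the sample values are all hypothetical.

```python
from statistics import mean


def summarize_window(readings, low, high):
    """Reduce a window of raw sensor readings to one compact summary.

    Readings outside [low, high] are treated as sensor glitches and dropped
    (a hypothetical filtering rule chosen for illustration).
    """
    valid = [r for r in readings if low <= r <= high]
    if not valid:
        return None  # nothing trustworthy to report for this window
    return {
        "count": len(valid),
        "min": min(valid),
        "max": max(valid),
        "mean": round(mean(valid), 2),
        "dropped": len(readings) - len(valid),
    }


# A short window of hypothetical temperature samples (°C), one glitch included.
window = [21.4, 21.5, 21.5, 85.0, 21.6, 21.7]
summary = summarize_window(window, low=-20.0, high=60.0)
print(summary)
```

Sending one small dictionary per window instead of every sample is where the bandwidth saving comes from.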

This local processing approach offers significant advantages. Rather than sending raw data to distant servers, edge devices handle analysis locally. For example, a security camera system processes video feeds locally to detect motion or suspicious activity, only sending relevant clips to the cloud for storage rather than streaming all footage continuously.
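Frame differencing is one simple way a camera could decide locally which footage is worth uploading. The sketch below is a toy illustration, not how any particular camera product works: frames are flat lists of grayscale values, and the `threshold` is a hypothetical tuning parameter.

```python
def mean_abs_diff(frame_a, frame_b):
    """Average per-pixel change between two equally sized grayscale frames."""
    return sum(abs(a - b) for a, b in zip(frame_a, frame_b)) / len(frame_a)


def frames_to_upload(frames, threshold):
    """Return indices of frames that differ noticeably from the previous one.

    Frames are flat lists of 0-255 grayscale values, a simplification of real
    video; `threshold` is a hypothetical tuning parameter.
    """
    flagged = []
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[i - 1], frames[i]) > threshold:
            flagged.append(i)
    return flagged


# Four tiny 4-pixel "frames": static, near-static, something moves, then holds still.
frames = [
    [10, 10, 10, 10],
    [10, 11, 10, 10],
    [10, 200, 200, 10],
    [10, 200, 200, 10],
]
print(frames_to_upload(frames, threshold=20))  # [2]
```

Only frame 2 is flagged, so only the clip around it would be sent to the cloud. Production systems generally use more robust techniques, such as background subtraction or on-device neural networks, but the bandwidth logic is the same.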

Edge Servers and Accelerators: Powering Edge Computing Performance

Edge servers and hardware accelerators work together to form the backbone of modern edge computing infrastructure. Here's how they enable powerful local processing:

- Edge Servers
  - Act as intermediaries between edge devices and cloud infrastructure
  - Provide local processing, storage, and networking capabilities
  - Handle complex computations using powerful processors and ample memory
  - Enable real-time analysis and decision-making at the network edge

- Hardware Accelerators (like GPUs)
  - Are specialized for specific types of computation
  - Excel at parallel processing for machine learning and computer vision
  - Offload intensive tasks from general-purpose processors
  - Significantly improve processing speed and system performance

Self-driving cars show how these pieces fit together. Each car carries an onboard computer that makes low-latency decisions for navigation and obstacle avoidance. In addition, edge servers equipped with GPU accelerators aggregate and process data collected from vehicles, then send it back to the data center for further model training. This split keeps driving decisions immediate while gathering training data without burdening the vehicles' own computers.
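The text does not specify how edge servers decide which data to send back for training. One common approach is uncertainty sampling, an active-learning heuristic that forwards the cases a model was least sure about. Here is a minimal sketch with a hypothetical `Detection` record and confidence threshold:

```python
from dataclasses import dataclass


@dataclass
class Detection:
    vehicle_id: str
    label: str
    confidence: float  # model confidence in [0, 1]


def select_for_training(detections, max_confidence=0.6):
    """Keep only uncertain detections, grouped by label.

    Uncertainty sampling: low-confidence cases tend to be the most informative
    for retraining, so this hypothetical edge-server step forwards those and
    discards the rest.
    """
    batches = {}
    for d in detections:
        if d.confidence < max_confidence:
            batches.setdefault(d.label, []).append(d)
    return batches


incoming = [
    Detection("car-1", "pedestrian", 0.97),
    Detection("car-2", "cyclist", 0.41),
    Detection("car-1", "cyclist", 0.55),
    Detection("car-3", "traffic_cone", 0.30),
]
batches = select_for_training(incoming)
print({label: len(items) for label, items in batches.items()})
# {'cyclist': 2, 'traffic_cone': 1}
```

Filtering at the edge server means the data center receives a curated batch rather than every detection from every vehicle.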

SabrePC offers a wide range of components for upgrading your computing infrastructure, including dedicated GPU-accelerated solutions and dense storage systems.

Security and Privacy Considerations

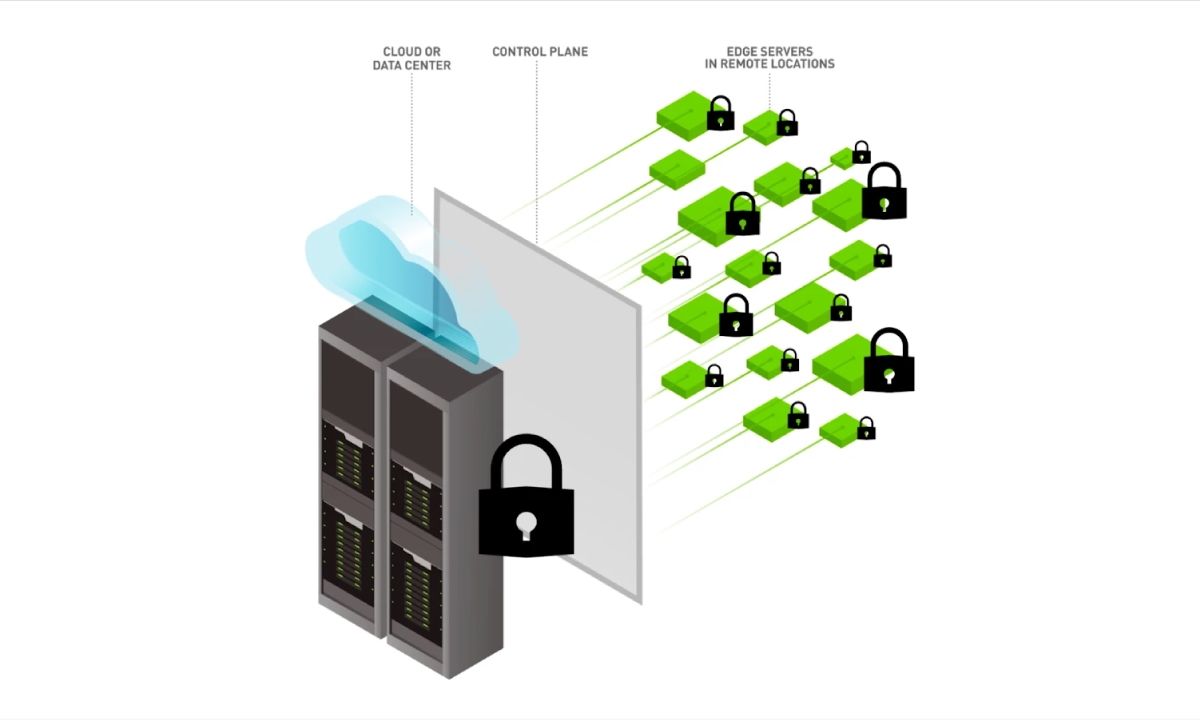

In edge computing, hardware devices play a crucial role in safeguarding data security and privacy. They combine several security features to protect sensitive information from unauthorized access, including encryption, which converts data into ciphertext that only holders of the correct key can read, keeping it confidential.
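To illustrate the encryption step, here is a minimal sketch using the Fernet recipe from the third-party Python `cryptography` package (symmetric, authenticated encryption). This is a software stand-in that shows the concept. It does not describe any specific hardware security feature, and the sample reading is hypothetical.

```python
from cryptography.fernet import Fernet, InvalidToken

# On real edge hardware the key would be kept in protected storage;
# generating it in memory here is only for illustration.
key = Fernet.generate_key()
cipher = Fernet(key)

reading = b'{"sensor": "door-1", "state": "open"}'
token = cipher.encrypt(reading)  # ciphertext that is safe to store or transmit

recovered = cipher.decrypt(token)  # only a holder of `key` can do this

# A different key cannot decrypt the token.
try:
    Fernet(Fernet.generate_key()).decrypt(token)
    wrong_key_rejected = False
except InvalidToken:
    wrong_key_rejected = True

print(recovered == reading, wrong_key_rejected)
```

Fernet also authenticates the ciphertext, so a tampered token is rejected with `InvalidToken` rather than silently decrypting to garbage.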

Looking Ahead: The Future of Edge Computing Hardware

As edge computing continues to evolve, hardware development faces both exciting opportunities and significant challenges. The industry is seeing rapid advancement in specialized hardware solutions, particularly in two key areas:

- Specialized Edge AI Processors
  - Custom-designed processors that optimize AI and machine learning workloads at the edge
  - Enhanced performance while maintaining energy efficiency. See AMD EPYC 8004 solutions.

- Emerging Architecture Solutions
  - Neuromorphic computing systems that mimic brain functionality
  - ARM-based processors offering improved power efficiency for edge devices. See Ampere Altra solutions.

While the potential is significant, several key challenges need addressing:

- Standardization and Integration
  - A fragmented hardware ecosystem with multiple vendors and architectures
  - Need for improved interoperability between different edge solutions

- Resource Management
  - Balancing performance requirements with power constraints
  - Scaling hardware deployment across numerous edge locations
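At a single site, the power-versus-performance trade-off can be framed as a small constrained selection: choose the highest-throughput operating mode that stays within the local power budget and still meets the workload's minimum requirement. Below is a minimal sketch. The mode names, wattages, and throughput figures are hypothetical placeholders, not numbers for any real product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PowerMode:
    name: str
    watts: float
    inferences_per_s: float


def pick_mode(modes, power_cap_w, min_throughput):
    """Pick the fastest mode that fits the power cap and meets the requirement.

    Returns None when no mode satisfies both constraints, signalling that the
    site needs different hardware or a relaxed requirement.
    """
    feasible = [
        m for m in modes
        if m.watts <= power_cap_w and m.inferences_per_s >= min_throughput
    ]
    if not feasible:
        return None
    # Among feasible modes, prefer throughput; break ties with lower power.
    return max(feasible, key=lambda m: (m.inferences_per_s, -m.watts))


# Hypothetical operating modes for one edge accelerator (not vendor figures).
modes = [
    PowerMode("eco", 15, 40),
    PowerMode("balanced", 30, 90),
    PowerMode("max", 60, 150),
]
print(pick_mode(modes, power_cap_w=35, min_throughput=50).name)  # balanced
print(pick_mode(modes, power_cap_w=20, min_throughput=50))       # None
```

Running the same selection with each location's own power cap is one way to reason about scaling a deployment across many sites with different constraints.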

The success of edge computing ultimately depends on overcoming these hardware challenges while continuing to innovate in processor design and system architecture. As the technology matures, we expect to see more efficient, powerful, and standardized hardware solutions emerge.

Need help selecting the right edge computing hardware for your application? Contact our engineering team for expert guidance.
