Introduction

Image classification, powered by deep learning algorithms, has revolutionized how computers understand and process visual information. From medical diagnosis to autonomous vehicles, this technology continues to shape innovation.

Two main architectures are prevalent in image classification: traditional Convolutional Neural Networks (CNNs) and Vision Transformers (ViTs). In this blog post, we explore their differences, how to choose between them, and some popular models to use for your image classification project.

Core Architectures for Image Classification

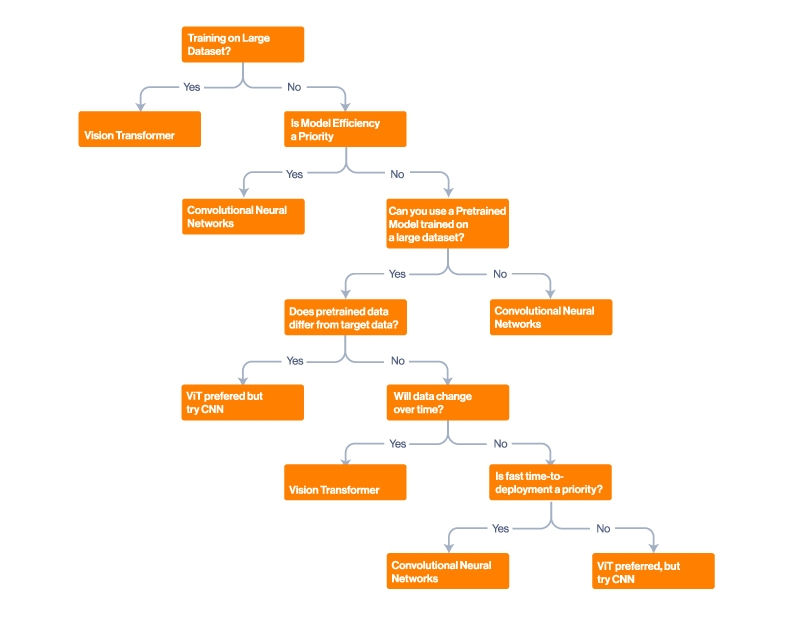

Modern image classification algorithms primarily use Convolutional Neural Networks (CNNs) and Transformers. Their main difference is how much inductive bias they build in. The decision tree above is a quick cheat sheet for selecting between the two.

Inductive bias refers to the built-in assumptions a model relies on to generalize from its training data to unseen data. Well-chosen biases help the model learn efficiently from less data and generalize better. Key aspects include:

- Locality: Nearby pixels are more related than distant ones, helping detect local patterns.

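- Translation Invariance: An object can appear anywhere in the image. Convolutions respond to a pattern wherever it occurs, and pooling layers make the output less sensitive to its exact position.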

- Hierarchical Structure: Learning features from simple to complex across layers.

- Parameter Sharing: Using the same filters across the image to reduce parameters and improve generalization.

- Global Attention: Transformers instead use attention mechanisms to capture relationships between distant parts of the image. They learn this global context from data rather than assuming locality.
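
Some rough numbers make the effect of parameter sharing concrete. The sketch below compares a single 3×3 convolution with a fully connected layer that maps a 224×224 RGB image to a feature map of the same spatial size with 64 channels. The image size and channel count are assumptions chosen for illustration and are not taken from any particular model.

```python
def conv2d_params(in_channels: int, out_channels: int, kernel_size: int) -> int:
    """Weights plus biases of a k x k convolution; one set of filters is reused at every position."""
    return out_channels * in_channels * kernel_size * kernel_size + out_channels


def dense_params(in_features: int, out_features: int) -> int:
    """Weights plus biases of a fully connected layer: every input is wired to every output."""
    return in_features * out_features + out_features


# Illustrative sizes (assumed): 224 x 224 RGB input, 64 output channels, same spatial size out.
HEIGHT, WIDTH, IN_CH, OUT_CH = 224, 224, 3, 64

conv = conv2d_params(IN_CH, OUT_CH, kernel_size=3)
dense = dense_params(HEIGHT * WIDTH * IN_CH, HEIGHT * WIDTH * OUT_CH)

print(f"3x3 convolution:       {conv:,} parameters")   # 1,792
print(f"fully connected layer: {dense:,} parameters")  # roughly 483 billion
```

The convolution's parameter count stays the same no matter how large the image is. The dense layer's count grows with the product of its input and output sizes.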

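CNNs assume that nearby pixels are related. Transformers make far fewer assumptions and let every image patch attend to every other patch from the first layer. On small datasets, the CNN's built-in locality is an advantage, because the model needs less data to learn useful features. On very large datasets, that same assumption can limit the model and lead to underfitting, while a Transformer can learn long-range patterns directly from the data. Hybrid architectures combine ViTs and CNNs to get the best of both, improving performance and efficiency.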
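
One way to see the locality assumption is to count how far a CNN can "see." The sketch below uses the standard receptive-field formula for stacked stride-1 convolutions, 1 + L(k - 1) for L layers of size k×k. It then asks how many 3×3 layers are needed to span a 224-pixel image. The sketch deliberately ignores pooling and strided layers, which real CNNs use to grow the receptive field much faster. By contrast, a single self-attention layer in a ViT can relate any two patches directly.

```python
def receptive_field(num_layers: int, kernel_size: int = 3) -> int:
    """Side length (in pixels) seen by one output of `num_layers` stacked stride-1 convolutions."""
    return 1 + num_layers * (kernel_size - 1)


def layers_to_cover(width: int, kernel_size: int = 3) -> int:
    """Smallest number of stride-1 convolution layers whose receptive field spans `width` pixels."""
    layers = 0
    while receptive_field(layers, kernel_size) < width:
        layers += 1
    return layers


for n in (1, 2, 5, 10):
    side = receptive_field(n)
    print(f"{n:>2} layers of 3x3 conv -> {side}x{side} pixel view")

print("3x3 layers needed to span 224 px without pooling:", layers_to_cover(224))  # 112
```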

Vision Transformers (ViTs)

ViTs split an image into fixed-size patches and process them as a sequence using transformer blocks, an architecture originally designed for language models. Transformers are inherently flexible and have minimal inductive bias: they make few assumptions about their inputs, which hurts their performance on small datasets. However, they excel at modeling long-range dependencies and global context, especially when trained on large datasets. ViTs need substantial training data and computational resources to reach high accuracy, but once trained they generalize and adapt well.
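
The patch-splitting step can be sketched in plain Python. The sketch assumes a 224×224 RGB image and 16×16 patches, a common ViT setting (e.g., ViT-B/16). It stores the image as nested lists indexed [row][column][channel]. A real ViT would follow this step with a learned linear projection and position embeddings, which are omitted here.

```python
from typing import List

Image = List[List[List[float]]]  # indexed as image[row][col][channel]


def patchify(image: Image, patch: int) -> List[List[float]]:
    """Split an H x W x C image into non-overlapping patch x patch tiles, each flattened to a vector."""
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image height and width must be divisible by the patch size")
    patches = []
    for top in range(0, h, patch):        # walk the patch grid row by row (raster order)
        for left in range(0, w, patch):
            flat = [
                value
                for r in range(top, top + patch)
                for c in range(left, left + patch)
                for value in image[r][c]
            ]
            patches.append(flat)
    return patches


# A blank 224 x 224 RGB image split into 16 x 16 patches.
img = [[[0.0, 0.0, 0.0] for _ in range(224)] for _ in range(224)]
tokens = patchify(img, 16)
print(len(tokens), len(tokens[0]))  # 196 patches, each 16 * 16 * 3 = 768 values
```

Self-attention compares every patch with every other patch, so its cost grows with the square of the patch count. Doubling the image side to 448 pixels gives 784 patches and roughly 16 times as many patch pairs.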

Convolutional Neural Networks (CNNs)

CNNs have been the backbone of image classification for over a decade. CNNs excel at detecting local patterns and hierarchical features through their layered architecture, making them particularly effective for tasks that rely on spatial relationships. Examples include ConvNeXt, ResNet, EfficientNet, and more.

Their fixed, local receptive fields and weight sharing result in more predictable training dynamics than ViTs. However, these same constraints can limit their ability to capture complex global patterns. CNNs don't need huge datasets and typically require fewer computational resources during inference, making them a practical choice for real-world applications where deployment efficiency is crucial.
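
Local pattern detection and weight sharing are easiest to see in a naive single-channel convolution. The sketch below is plain Python for illustration only; real frameworks use heavily optimized kernels. The 3×3 vertical-edge kernel is a hand-picked example, whereas a CNN learns its kernels from data.

```python
from typing import List

Grid = List[List[float]]


def conv2d(image: Grid, kernel: Grid) -> Grid:
    """'Valid' 2D cross-correlation (what deep learning libraries call convolution), one channel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Locality: each output only looks at a kh x kw neighborhood of the input.
            # Weight sharing: the same kernel weights are reused at every (i, j).
            row.append(sum(kernel[a][b] * image[i + a][j + b]
                           for a in range(kh) for b in range(kw)))
        out.append(row)
    return out


# Dark left half, bright right half: a vertical edge between columns 2 and 3.
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

for row in conv2d(image, vertical_edge):
    print(row)  # prints [0, 3, 3, 0] for each of the two output rows
```

The kernel fires only where the edge is, and it would fire in exactly the same way if the edge were moved elsewhere in the image.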

CNNs may seem outdated, but for a straightforward task like image classification, simple is good. CNNs remain among the most widely used image classification architectures because they are lightweight, easy to deploy, and less data-hungry.

Hybrid Approaches (CNN + Transformer)

Hybrid models combine the inductive bias of CNNs with the contextual power of Transformers. Examples include CoAtNet and TinyViT. These architectures typically use CNN-like operations at lower levels to process local features efficiently while incorporating transformer blocks at higher levels to capture global relationships.

This hierarchical approach allows them to maintain the computational efficiency of CNNs while achieving the flexibility of transformers. The modular nature of hybrid models also enables researchers to experiment with different combinations of CNN and transformer components, leading to continuous innovations in architecture design.
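
Hybrids put convolutions early and attention late mostly for reasons of arithmetic. The number of spatial positions shrinks as the network downsamples, while the cost of global attention grows with the square of that number. The sketch below tabulates this for a hypothetical four-stage layout loosely modeled on CoAtNet's convolution-convolution-transformer-transformer design. It assumes a 224×224 input and downsampling factors of 4, 8, 16, and 32.

```python
# Hypothetical stage layout, loosely modeled on CoAtNet's "C-C-T-T" design:
# (block type, downsampling factor relative to the input image)
STAGES = [
    ("conv", 4),
    ("conv", 8),
    ("transformer", 16),
    ("transformer", 32),
]


def stage_report(input_size: int = 224) -> list:
    """Return (block type, grid side, token count, pairwise interactions) for each stage."""
    rows = []
    for kind, stride in STAGES:
        side = input_size // stride
        tokens = side * side      # spatial positions (tokens) at this stage
        pairs = tokens * tokens   # token pairs that global self-attention would have to score
        rows.append((kind, side, tokens, pairs))
    return rows


for kind, side, tokens, pairs in stage_report():
    print(f"{kind:<12} {side:>2}x{side:<2} tokens={tokens:>5,} attention pairs={pairs:>11,}")
```

At 1/4 resolution there are 3,136 positions and almost 10 million pairs, so attention at that stage would be expensive. At 1/16 resolution there are only 196 positions and 38,416 pairs, which is why the transformer stages sit there.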

Hybrid models often have a better performance-to-efficiency ratio on medium-sized datasets, making them an excellent alternative to pure transformers.

Best Image Classification Models to Try in 2025

We used the paperswithcode.com leaderboard for the ImageNet image classification benchmark to find the best-performing models. Topping this leaderboard is no easy feat, but take the results with a grain of salt. A model trained to top the chart may not perform as well in other use cases, and competing models are often only a couple of percentage points behind.

Here are some popular models to experiment with for your next Image Classification project.

- Vision Transformers

  - ViT & ViT-G: Both by Google, ViT and ViT-G are technically two different models trained on datasets of different sizes. ViT is the original architecture. ViT-G is a next-generation, scaled-up version trained on a 3-billion-image dataset to explore how vision transformers scale.

  - Swin Transformer: A vision transformer that computes self-attention within local windows and shifts the windows between layers to enable cross-window interaction. This design makes it efficient and scalable for high-resolution vision tasks.

- Convolutional Neural Networks

  - ConvNeXt: A modern pure CNN that borrows design and training choices popularized by Vision Transformers (such as LayerNorm, GELU activations, and large kernel sizes). There is also a ConvNeXt V2.

  - EfficientNet: Scales network depth, width, and input resolution together using a single compound coefficient, balancing accuracy and efficiency.

  - ResNet: A classic CNN that introduced residual (skip) connections, which made very deep networks trainable. It has a strong support base because it is relatively lightweight and widely adopted. It comes in several depths (e.g., ResNet-18, ResNet-50, ResNet-152) for larger or smaller datasets. ResNet is also available in the PyTorch Image Models (timm) library.

- Hybrid Models

  - CoAtNet: Combines convolution and self-attention in a unified architecture, capturing both local inductive bias and global context for strong generalization and scalability.

  - TinyViT: A lightweight Vision Transformer optimized for mobile and edge devices, using local convolutions and attention to balance accuracy and efficiency.

  - CvT (Convolutional Vision Transformer): Introduces convolutions into the token embedding and attention layers to incorporate spatial bias and improve learning on image data.
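
EfficientNet's compound scaling rule multiplies depth by α^φ, width by β^φ, and resolution by γ^φ, using a single user-chosen coefficient φ. The sketch below uses the coefficients reported in the EfficientNet paper: α = 1.2, β = 1.1, γ = 1.15. These were chosen so that α·β²·γ² ≈ 2, meaning each unit of φ roughly doubles FLOPs. The baseline depth, width, and resolution passed in are placeholder values. The released B1 to B7 checkpoints use their own published multipliers, which do not exactly follow this formula, so the sketch shows the rule rather than reproducing those models.

```python
# Coefficients reported in the EfficientNet paper for scaling up the B0 baseline.
ALPHA = 1.2   # depth multiplier per unit of phi
BETA = 1.1    # width (channel) multiplier per unit of phi
GAMMA = 1.15  # input-resolution multiplier per unit of phi


def compound_scale(phi: float, base_depth: int, base_width: int, base_resolution: int):
    """Scale depth, width, and resolution together with a single compound coefficient phi."""
    depth = round(base_depth * ALPHA ** phi)
    width = round(base_width * BETA ** phi)
    resolution = round(base_resolution * GAMMA ** phi)
    # FLOPs scale roughly with depth * width^2 * resolution^2, i.e. (ALPHA * BETA^2 * GAMMA^2) ** phi.
    flops_factor = (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi
    return depth, width, resolution, flops_factor


# Placeholder baseline for illustration: 16 blocks, 32 channels, 224-pixel inputs.
for phi in range(4):
    d, w, r, f = compound_scale(phi, base_depth=16, base_width=32, base_resolution=224)
    print(f"phi={phi}: depth={d}, width={w}, resolution={r}, ~{f:.2f}x FLOPs")
```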
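
Swin's efficiency gain can be checked with the FLOP estimates from the Swin Transformer paper. For an h×w grid of C-dimensional tokens, global multi-head self-attention costs about 4hwC² + 2(hw)²C. Attention restricted to M×M windows costs about 4hwC² + 2M²hwC, which is linear in the number of tokens. The numbers below assume a 56×56 token grid, C = 96, and M = 7, values that match Swin-T's first stage and are used here for illustration. The `window_ids` helper is a hypothetical one-axis toy. It shows how a cyclic shift regroups positions into new windows.

```python
def global_msa_flops(h: int, w: int, c: int) -> int:
    """Approximate FLOPs of global multi-head self-attention (Swin paper estimate, softmax ignored)."""
    return 4 * h * w * c * c + 2 * (h * w) ** 2 * c


def window_msa_flops(h: int, w: int, c: int, m: int) -> int:
    """Approximate FLOPs when attention is restricted to non-overlapping m x m windows."""
    return 4 * h * w * c * c + 2 * m * m * h * w * c


def window_ids(length: int, window: int, shift: int = 0) -> list:
    """Window index of each position along one axis after cyclically shifting by `shift`."""
    # Rolling the feature map back by `shift` moves original position p to (p - shift) % length.
    return [((pos - shift) % length) // window for pos in range(length)]


h = 56
w = 56
c = 96
m = 7
full = global_msa_flops(h, w, c)
windowed = window_msa_flops(h, w, c, m)
print(f"global attention:   {full:,} FLOPs")
print(f"windowed attention: {windowed:,} FLOPs (about {full / windowed:.0f}x fewer)")

# Regular vs. shifted windows on a toy axis of 8 positions, window size 4, shift 2.
print(window_ids(8, 4))           # [0, 0, 0, 0, 1, 1, 1, 1]
print(window_ids(8, 4, shift=2))  # [1, 1, 0, 0, 0, 0, 1, 1]
```

In the shifted layout, positions 2 to 5 now share a window even though they were previously split between windows 0 and 1. This is how information crosses window boundaries. Positions 6, 7, 0, and 1 are grouped together only because of the wrap-around. The Swin paper applies an attention mask so that those two regions do not attend to each other.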

Industry Use Cases

Different industries use different image classification models based on their specific needs. In healthcare, CNNs are often used for routine medical imaging like X-rays and MRIs, while transformers handle complex 3D imaging tasks. The automotive industry employs hybrid approaches for autonomous vehicles, balancing speed and accuracy.

- Healthcare: CNNs for basic scans, transformers for advanced imaging

- Autonomous Vehicles: Hybrid models for overall perception, CNNs for rapid obstacle detection

- Manufacturing: CNNs for production line inspection, transformers for complex verification

- Retail: Hybrid solutions for inventory systems, CNNs for basic product identification

Conclusion

While Vision Transformers showcase impressive capabilities in handling complex visual tasks, CNNs remain reliable workhorses for many practical applications. Hybrid approaches bridge the gap, offering a compelling middle ground that combines the strengths of both architectures.

When choosing a model architecture, consider three key factors:

- Dataset size and characteristics

- Available computational resources

- Deployment environment constraints

For most practical applications, especially those with limited datasets or computational resources, CNNs or hybrid models often provide the best balance of performance and efficiency. Vision Transformers shine in scenarios with abundant data and computational power, particularly for complex tasks requiring global context understanding.

Model choice is driven first by your data (its size and characteristics) and then by the computational resources available. Vision Transformers require substantial GPU power, CNNs run efficiently on modest hardware, and hybrid models fall somewhere in between.
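
The guidance above can be condensed into a rule-of-thumb helper. This is a hypothetical sketch. The `Project` fields and the numeric thresholds are illustrative assumptions, not benchmarks. The rule also ignores factors such as fine-tuning from pretrained weights.

```python
from dataclasses import dataclass


@dataclass
class Project:
    num_training_images: int
    gpu_memory_gb: float   # memory of the largest GPU available for training
    edge_deployment: bool  # must the model run on mobile or embedded hardware?


# Hypothetical thresholds for illustration only; tune them for your own data and hardware.
SMALL_DATASET = 100_000
LARGE_DATASET = 10_000_000
BIG_GPU_GB = 40


def suggest_architecture(p: Project) -> str:
    """Map dataset size, compute, and deployment target to an architecture family."""
    if p.edge_deployment:
        return "CNN or lightweight hybrid (e.g. TinyViT)"
    if p.num_training_images < SMALL_DATASET:
        return "CNN"
    if p.num_training_images >= LARGE_DATASET and p.gpu_memory_gb >= BIG_GPU_GB:
        return "Vision Transformer"
    return "Hybrid"


print(suggest_architecture(Project(num_training_images=20_000, gpu_memory_gb=24, edge_deployment=False)))  # CNN
```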

At SabrePC, we ensure access to properly configured GPU workstations and servers, matched to your workload for optimal performance. As these architectures evolve alongside hardware capabilities, success lies in finding the right balance between model choice and computational resources for your specific needs. Talk to us today to get a free quote on your next HPC workstation, server, and/or cluster.